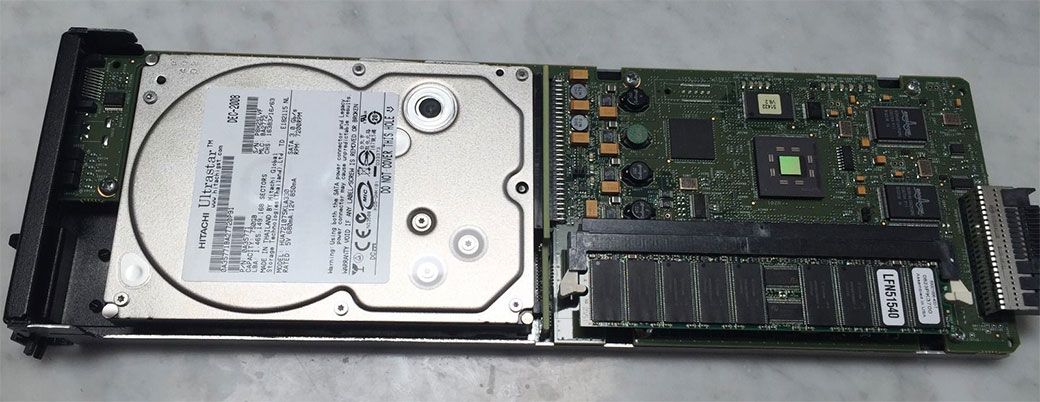

In 2006, I was invited into a meeting room at AC Nielsen where I was consulting at the time. Nielsen had just purchased their first Netezza system. I believe Mark Jeske was Netezza’s first sales engineer, a role I would later play myself at IBM. They were on their mustang generation system, and their introduction at the time involved a presentation of the architecture, as well as handing out a SPU; a parallel processing slice made of 1 disk attached to an FPGA and a CPU.

This was a brand new custom architecture with custom hardware packaged very cleverly to address the challenge of dealing with large amounts of data and data warehouses. I was to be the first developer on Nielsen’s first rack purchased; something they went on to purchase hundreds of racks later to really transform their business. The system had 112 of the slices above, 108 were active. I didn’t have to look that up, I remember that from this conversation; because most of what I heard was this: “We just bought a baby supercomputer – you’re going to be the one that gets to use it.” I was super excited – moreover than that, the system completely delivered. There were queries that on my prior project were taking 15 minutes to complete on a regular Oracle system; they would complete in 2 seconds on this magic Netezza box. I was literally smiling all the time working on this thing – it was super fun to work on!

I worked with Netezza on and off as it evolved from there to be even more powerful and more clever, in TwinFin, Striper, and Mako configurations; wearing all hats around it from data modeler, ETL designer, report developer, sales engineer, and DBA. It’s truly a system I have enjoyed working on, and I absolutely think it outshines its peers. Unlike it’s competitors who were traditional databases “appliantized” to try and work as quickly and cleverly as Netezza, and as easily – Netezza itself was always built ground up from day one to be an appliance database that excelled at working with large amounts of data and data warehouse workloads. Its simplicity simply could not be touched, and it’s clever architecture was it’s own. While a sales engineer, I could compete on site as 1 rep making simple design choices while someone like Oracle could show up with a RAC Exadata system and 3 Oracle Ace ninja sales DBAs. What I could accomplish with ease over a coffee, these guys could use their expertise to compete, but how many of those ninjas were on anyone’s staff? I didn’t become a sales engineer for Netezza because they were the only guys offering – I became one because they were the best.

Why all this backstory on Netezza? Partly to establish my credibility – but here’s the real reason: there hasn’t been a database I’ve been so excited about since Netezza than Snowflake db. This database is awesome. Per wiki it’s had a unicorn valuation, which seems in part because of the management involved. I’m here to say from a data warehousing perspective, I think this database is the real deal and provides an amazing platform.

Here’s why I got excited:

- Snowflake was designed from the bottom up for the cloud. A lot of competing cloud databases are actually other databases “cloud-enabled” – which can bring plenty of good stuff, but also burdens them with historical architecture or complexity that inhibits optimal performance on a cloud environment. This absolutely made a difference for Netezza vs. its competitors and it will for Snowflake as well.

- The ability to scale compute up or down – as well as turn it off – and is an awesome feature. It’s also a very easy to do; it appears to take effect right after any ongoing queries are completed.

- The ability to scale bottlenecks of the architecture independently is awesome.

- Are your queries more compute intensive? Then you can create larger compute nodes. Since the data is stored columnar compressed, I think you use some more CPU resources than many db architectures, but the trade off is faster reads. In any case, if you want your query faster, you can scale up the compute node size. This is vertical scaling to decrease atomic query speed.

- Do you have a large amount users who generate a lot of queries; is your warehouse highly concurrent? Concurrency goes down as atomic query speed declines, but if you need to scale out – you can also scale horizontally; clusters of your nodes can be dynamically added per your settings as workloads grow.

- I’m unclear on how read and i/o resources scale up for larger amounts of data, although by the columnar nature of the storage I suspect the query burden is more compute intensive than read on this platform vs. many databases. Does a larger node just give you more computer,

or more i/o bandwidth as well? I don’t know. I do not separate compute nodes/virtual warehouses have completely separate i/o resources however – so if one warehouse is doing a full table scan of a 10 TB table, another separate warehouse hitting the exact same table will suffer nothing at all!

- Netezza has few knobs to turn; but following best practices for database design is going to help you. Following Netezza best practices is going to help you as well; and so is writing sql to mostly cleverly use its architecture. Snowflake is amazingly even more simple – there’s even fewer knobs, less to tune but also less to go wrong as well. In my sales engineer career, I’d show up sometimes to upset customers who complained the database got so much slower than in the proof of concept; often times this would be ignoring very simple best practices to follow, resulting in things like full table scans of a 1 TB table or poor distribution keys resulting in a lot of query time data movement. Very simple changes would bring 90 second queries down to 1 second ones to remedy these situations; the problem was that despite these practices being simple – DBAs and developers simply didn’t bother to learn them or implement them. Snowflake addresses this problem by simply offering up less things for resources to ignore.

- Due to the segregation of storage and virtual warehouse compute, it’s entirely possible to architect solutions that separate light users from power users, or bad actors vs. others; I have seen system where *everyone* is a power user (my definition of which is direct SQL access) – when not-that-power-a-user executes a runaway query, cross join, etc. that’s simply wasteful – everyone suffers on a traditional database where these resources are shared across the userbase. This pain is much more compartmentalized on Snowflake within just the virtual warehouse compute the bad actor is running within.

- It appears cost is tracked and summarized, at least easily today, at a virtual warehouse level – I think just as interesting (if not moreso for me as a DBA geek) would be seeing it at a user level. I have some SAS users writing horrifically inefficient stuff that takes 10, 20+ minutes to run against Netezza; I’m not even a SAS guy, but have rewritten them within a day’s worth of effort to complete in 5 seconds – yet have difficulty training and convincing people to switch to improved techniques for working on a warehouse. With this chargeback accounting, it would be nice to be able to show the actual cost for these bad queries. Certainly this still pales in comparison to the opportunity cost of someone’s labor hours when they are wasting 20 minutes on something that should take 5 seconds as well.

- I love Netezza – but the barrier to entry is high; the platform is expensive. Snowflake? Organizations that we probably wouldn’t have started a conversation with about Netezza can afford to pick this database up! If you’ve got a 1 or 3 TB data warehouse on Oracle or SQL Server as an on premise solution, I think Snowflake is really making a very compelling argument to migrate the entire thing right on over to it.

So here’s a theoretical Snowflake db data warehouse:

- Large ETL morning batch run and burden begins at 5am. ETL compute node resumes and ramps up to large size state to get the processing done more quickly and have the data ready to go for 7am business day start time on the East Coast. Spin down to small size node for trickle and one-off ETLs that may happen to come in during the business day.

- Sales department is using Business Objects, Cognos, Tableau, whatever – against defined data marts with generated SQL queries from these tools. The sales department gets a medium sized set of nodes to handle the speed expected of the workload. Set it to grow from 1 up to 5 clusters dynamically to handle the concurrent workload as it rises and falls throughout the day. End of the month reporting? Size it up to large compute nodes until its complete!

- Power/SAS users Marketing coming in with heavy lifting required for queries get assigned a separate Marketing virtual warehouse with large sized compute, to match their SLA requirements for their workload.

As the system is elastic vertically and horizontally, it can handle all these needs and address them well; if not a 24 hour shop, the nodes can be paused once inactivity is encountered automatically, or from 8pm to 5am or some other interval. This solves a lot of the problems with my current database favorite of Netezza; there’s little practices for Snowflake users to not implement and suffer from as a result. The burden and requirements of departments and users can be completely segmented from eachother; not possible on a shared resource where good actors must suffer through the inefficiency of bad design or bad actors. All in all this is a really exciting database, and I expect a huge amount of growth and adoption of it going forward.

UPDATE: I’ve been playing with the database a bit now. Here’s some more on the Snowflake architecture.

Stay connected